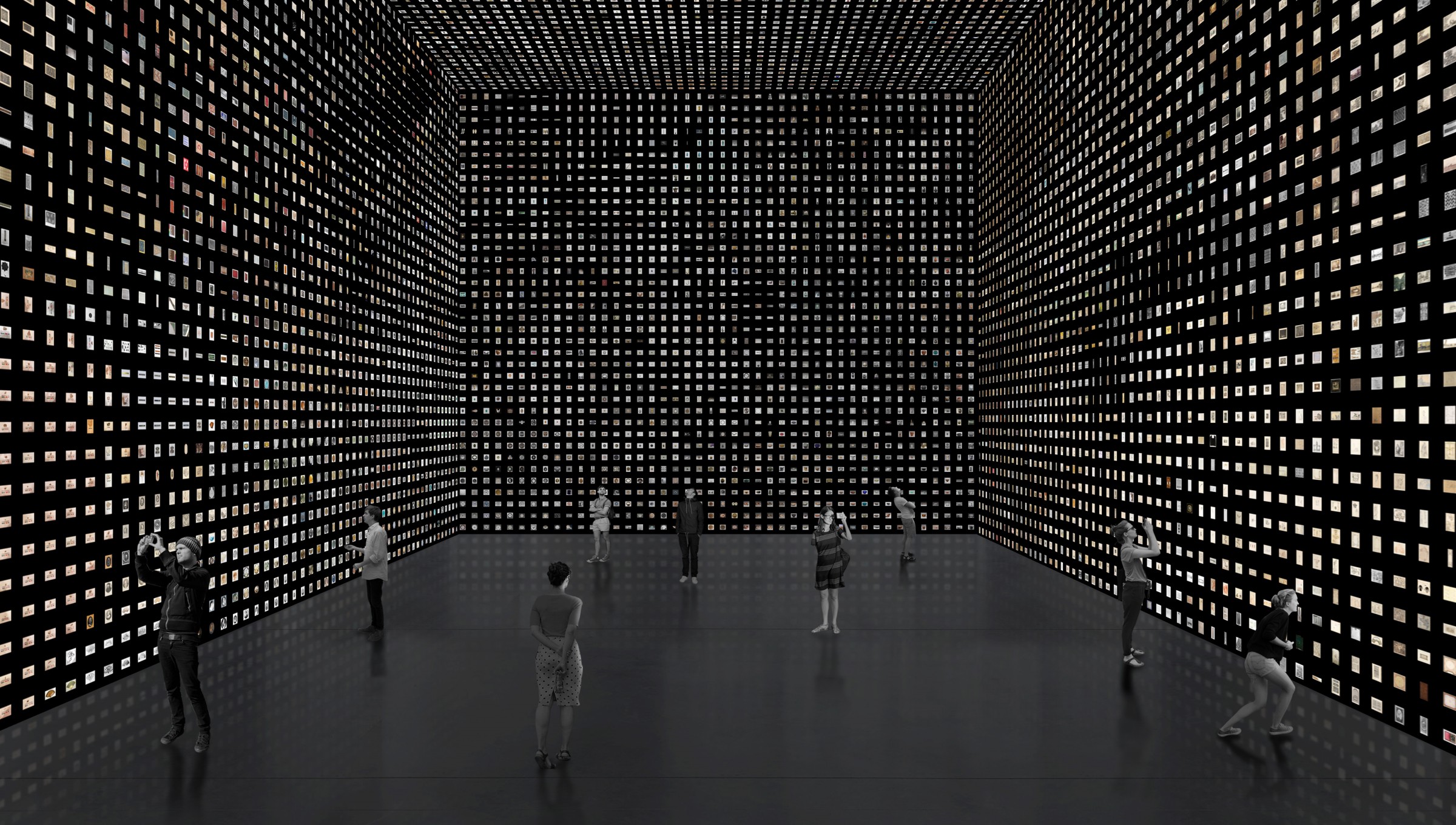

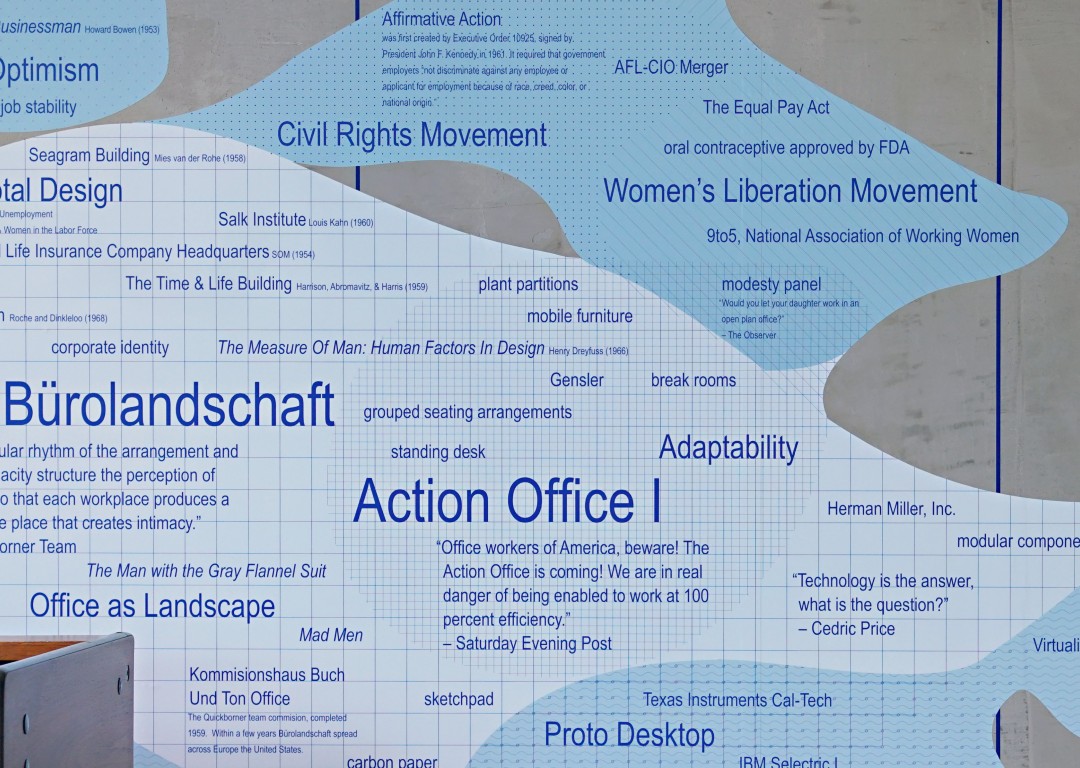

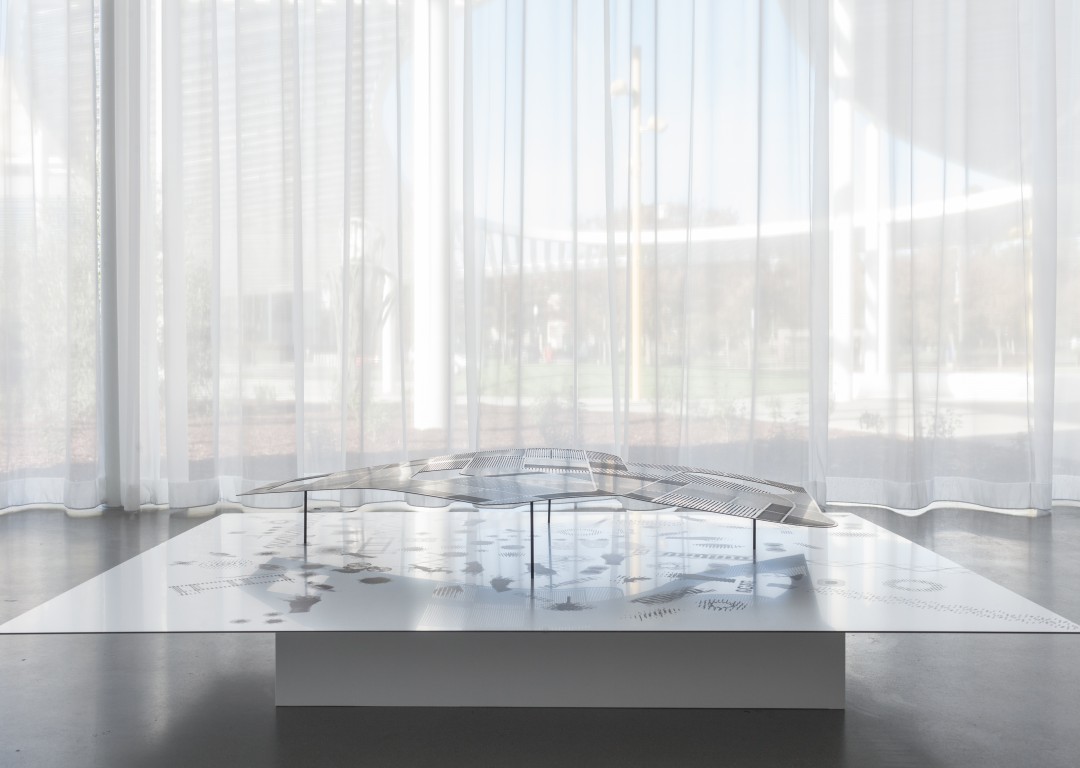

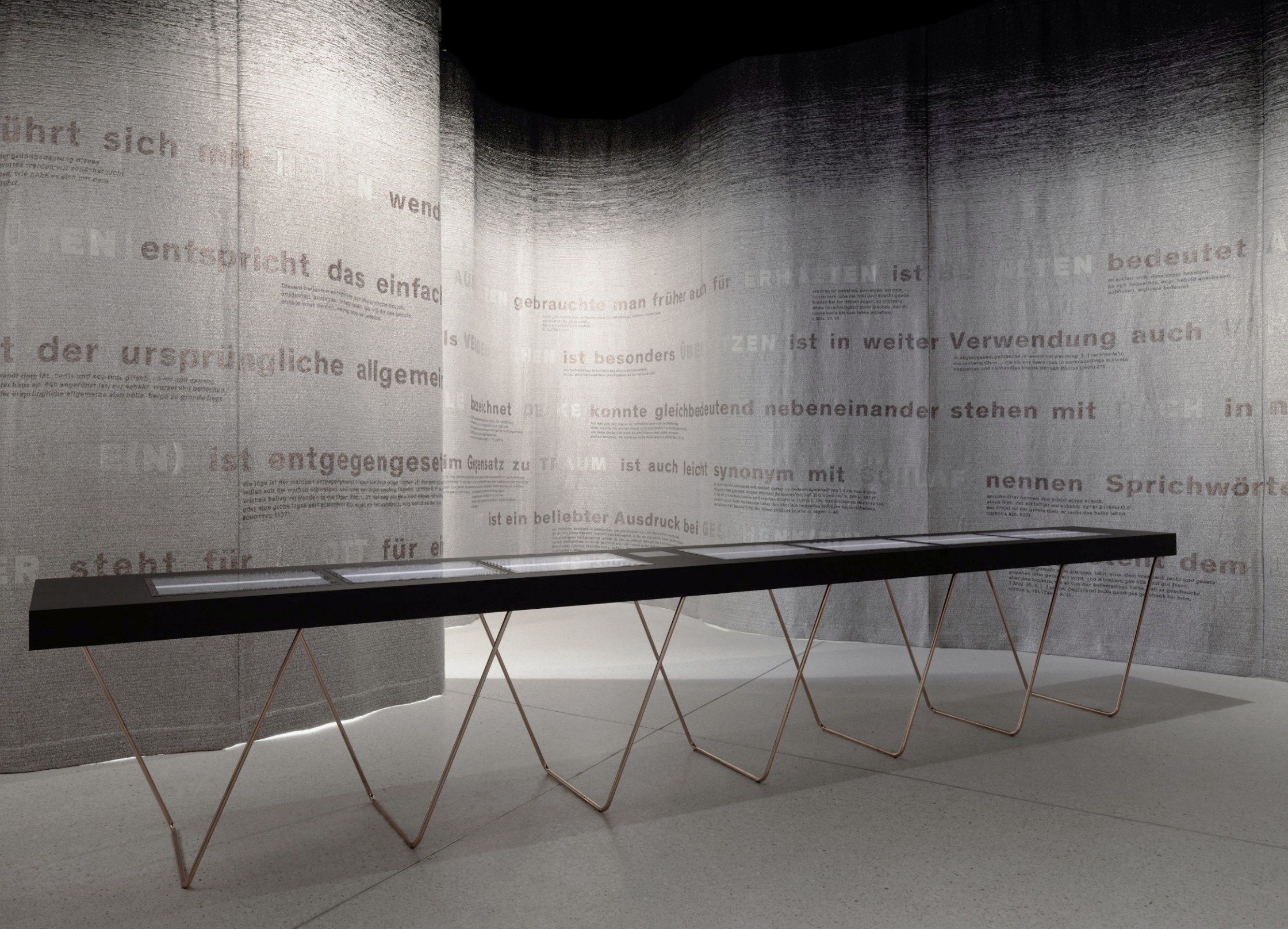

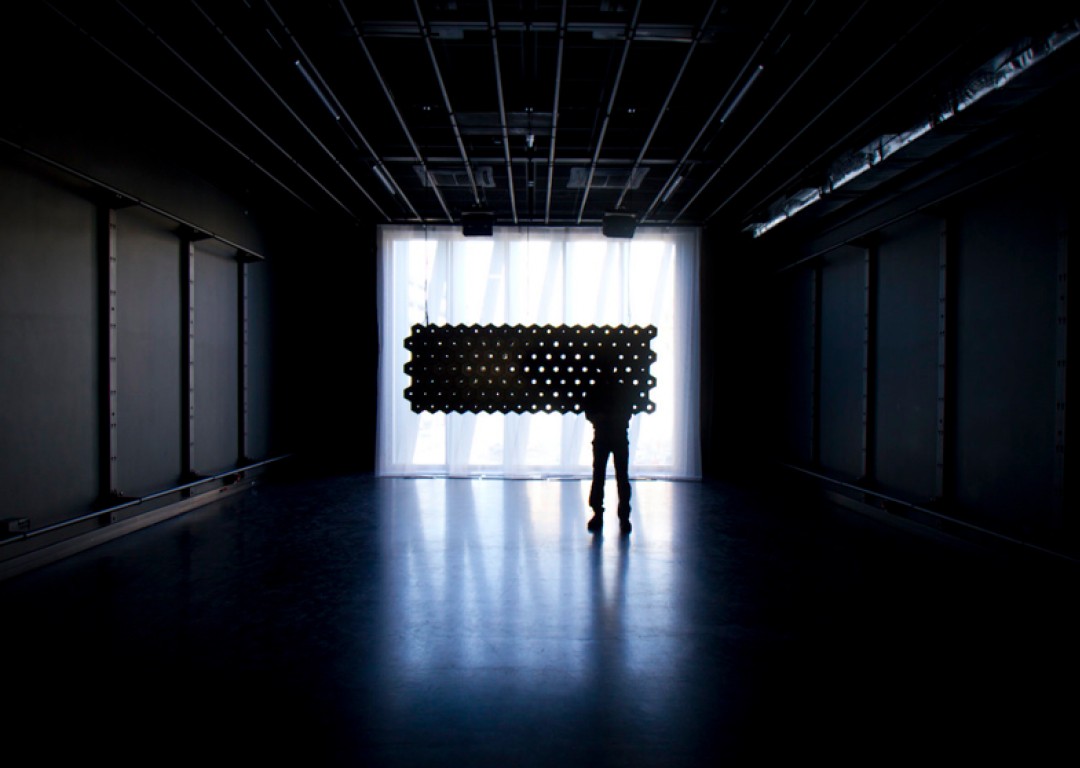

All of a museum’s digitized artifacts are laid out in a large-scale printed grid that organizes them by visual similarity. The immersive arrangement lets viewers experience the entire collection at once while discovering the visual and structural patterns within it.

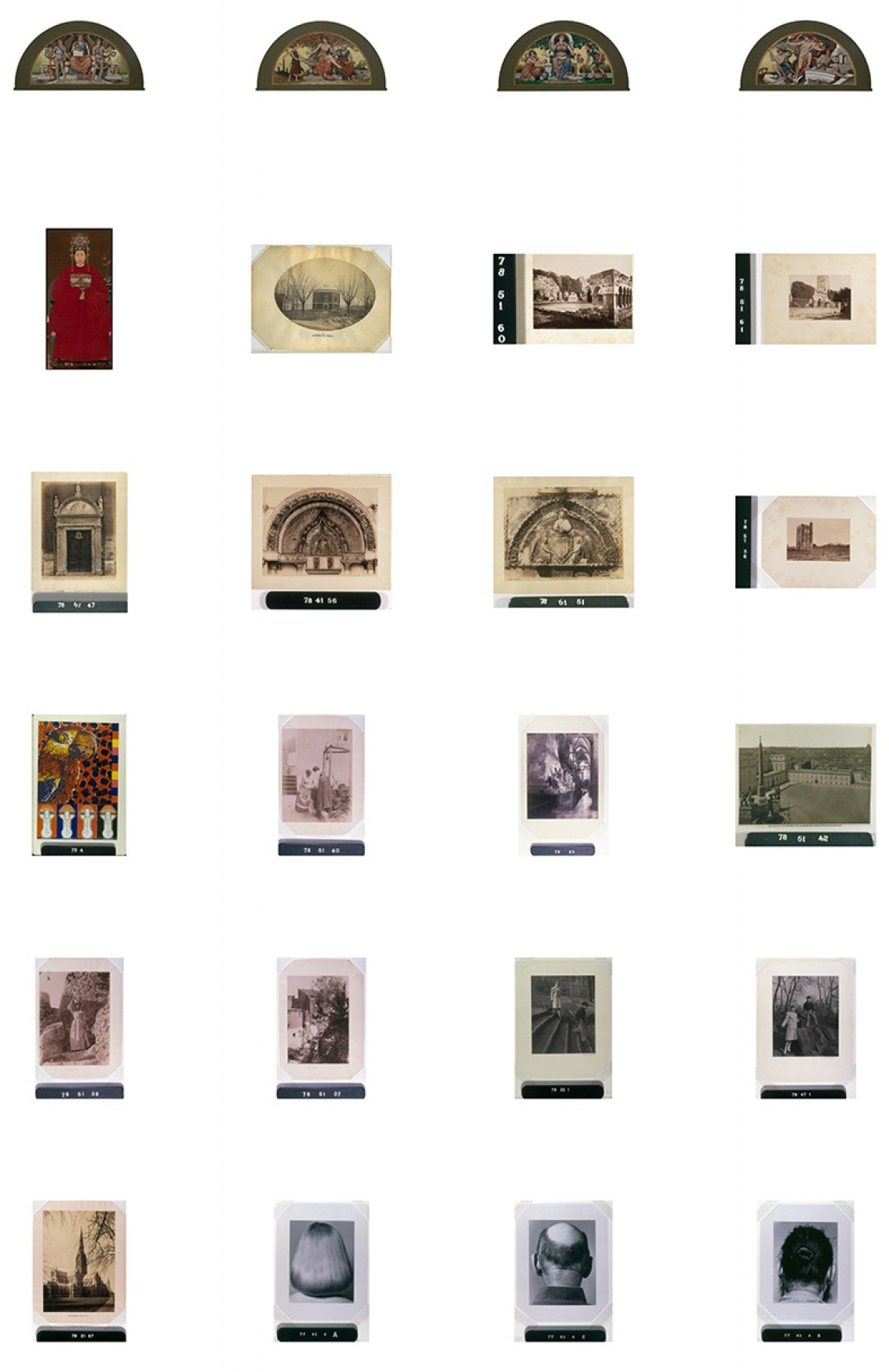

Because the printed reproduction has a high resolution, viewers can discern details within the displayed artifacts even at thumbnail sizes that would be barely recognizable on a digital screen. The printed grid becomes a massive yet engaging index to the collection.

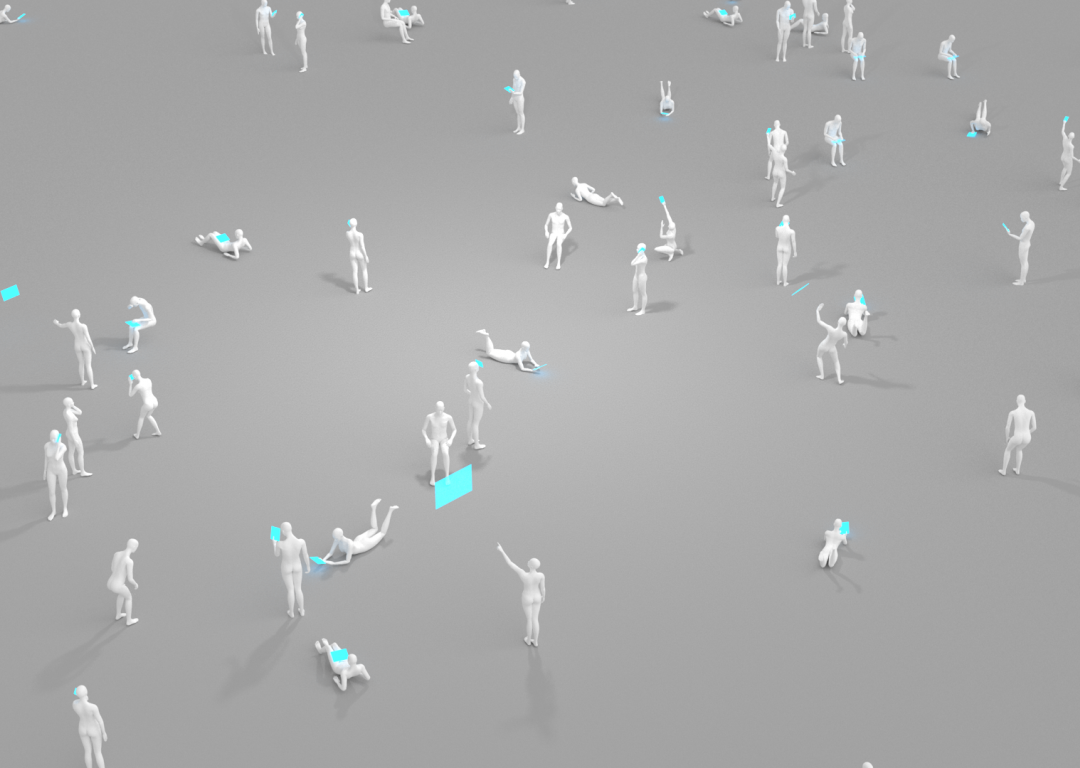

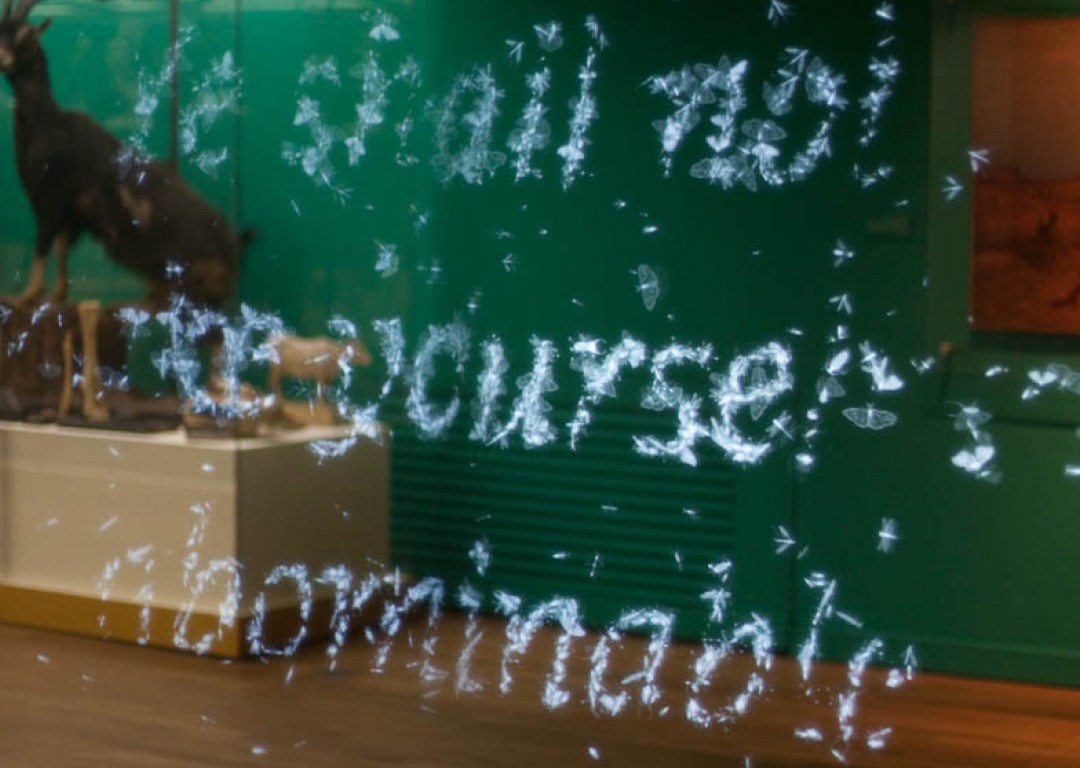

Augmented reality (AR) lets viewers guide themselves through important aspects of the collection. When they scan any artifact in the grid, a digital layer immediately and dynamically reveals connections between the artworks. For example, the AR layer can show all artworks from a specific year, regardless of their geographical origin, or all the works by a particular artist throughout the collection. Viewers can also follow curated storylines on topics such as race, gender, and provenance along virtual pathlines, walking through these stories in the physical space.
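The page does not describe how the AR layer queries the collection. As a hypothetical sketch, once the scanned artifact has been recognized, showing "all artworks from a specific year" or "all the works of a particular artist" reduces to a metadata filter. The `Artifact` record and the `related` function below are illustrative assumptions, not the project's data model.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Artifact:
    # Hypothetical minimal metadata record; real collection records are richer.
    object_id: str
    artist: Optional[str]
    year: Optional[int]
    origin: Optional[str]


def related(scanned: Artifact, collection: List[Artifact], by: str) -> List[Artifact]:
    """Return the other artifacts that share the scanned artifact's value for `by`.

    `by` is "year" or "artist". Geographic origin is deliberately not part of
    the match, so a year query spans every region of the collection.
    """
    if by not in ("year", "artist"):
        raise ValueError(f"unsupported facet: {by}")
    key = getattr(scanned, by)
    if key is None:
        return []  # undated or anonymous works have nothing to match on
    return [a for a in collection
            if a.object_id != scanned.object_id and getattr(a, by) == key]
```

For example, scanning a work dated 1651 with `by="year"` would return every other 1651 work, whichever region it comes from. The AR layer would then highlight those grid positions.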

The grid thus becomes an immersive index of the collection itself. It can stand on its own or complement the museum, encouraging visitors to take an unusual perspective and follow the first leads the index offers. The concept applies to any digitized museum collection and could even be extended to a collection of all the world's collections.


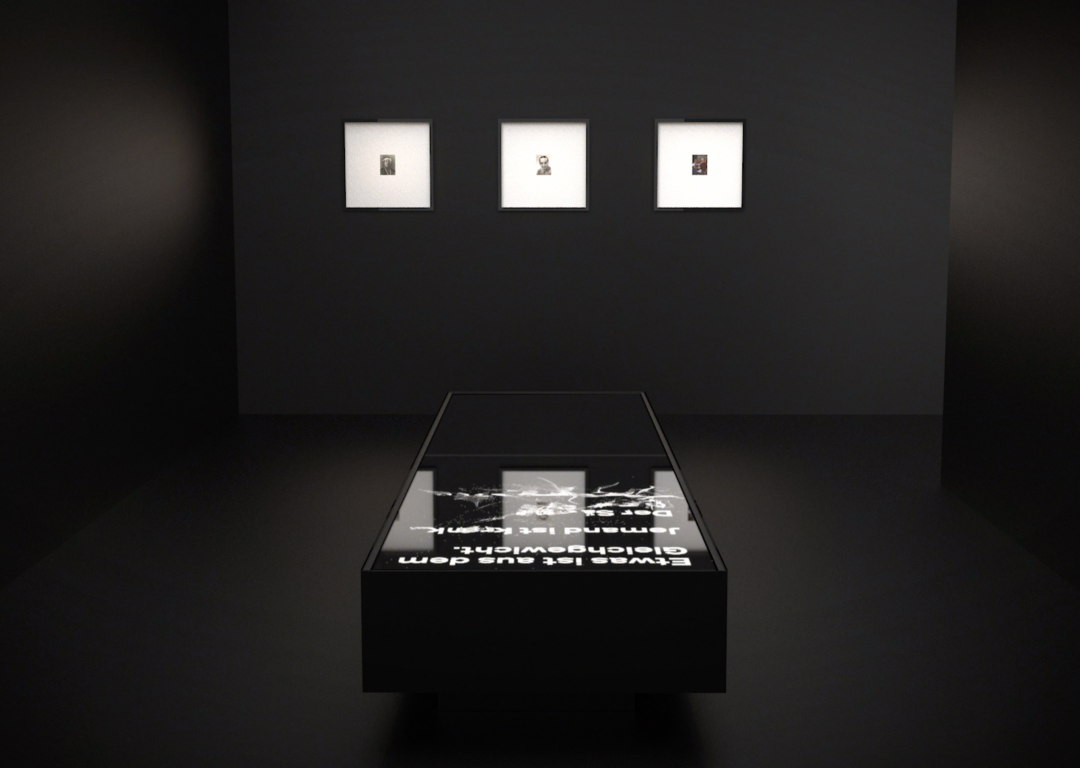

The Archduke Leopold Wilhelm in his Painting Gallery in Brussels by David Teniers the Younger, 1651

The Louvre scene in Bande à part by Jean-Luc Godard, 1964

Prototype of All At Once - Index, using more than 13,000 images from the digital collection of the Williams College Museum of Art

1. Index

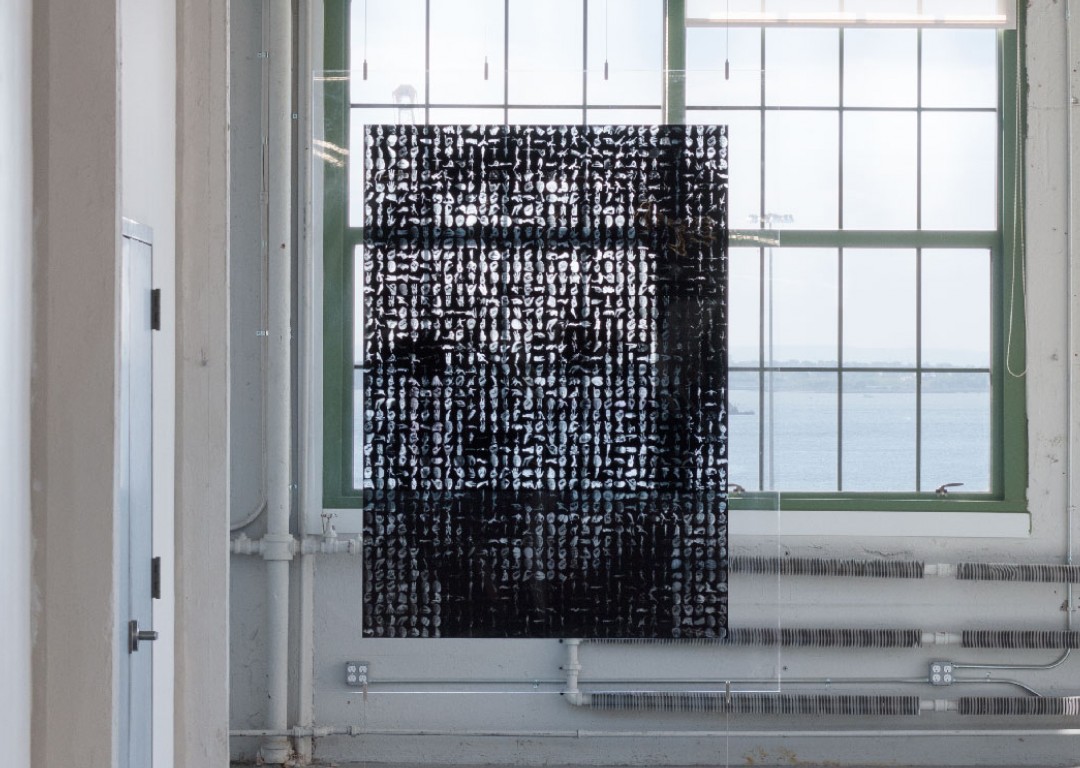

All At Once proposes an algorithmic, data-driven approach to exploring millennia of material culture. It is a design concept and software framework that uses machine learning to comprehend and display encyclopedic museum collections in ways that were previously impossible.

With All At Once, we can juxtapose items that are distant from each other in space and time, or that art-historical conventions keep apart. When these new configurations are displayed in immersive environments, viewers can understand and engage with an entire collection beyond the screen of a computer or smartphone. All At Once thus seeks to redefine the unique experience of visiting a museum building for the digital age.


All At Once is an independent research project by TheGreenEyl. It received funding from the Knight Foundation through New Inc., the New Museum's incubator.

The data was provided by The Met Museum's Open Access Initiative and by the Williams College Museum of Art, in collaboration with Micah Walter Studio.

Concept & Design: Frédéric Eyl & Richard The, Machine learning research: Agnes Chang


Journey through a collection: a sequence of 1,000 objects from The Met Museum's digital collection, sorted by visual similarity.

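The caption does not say how the sequence was ordered. One plausible approach, assumed here only for illustration, is a greedy nearest-neighbor walk over image feature vectors (for example, embeddings from a pretrained image model). It starts at one object and always steps to the most similar object not yet visited. The feature vectors and the function names `cosine` and `similarity_sequence` are hypothetical.

```python
import math
from typing import Dict, List, Sequence


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity of two equal-length feature vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    if na == 0 or nb == 0:
        return 0.0
    return dot / (na * nb)


def similarity_sequence(features: Dict[str, Sequence[float]], start: str) -> List[str]:
    """Greedy nearest-neighbor walk through all artifacts, beginning at `start`.

    Each step moves to the unvisited artifact most similar to the current one.
    This is O(n^2) similarity computations: fine for a sketch, slow for very
    large collections.
    """
    if start not in features:
        raise KeyError(start)
    order = [start]
    remaining = set(features) - {start}
    while remaining:
        current = features[order[-1]]
        # Iterate in sorted ID order so ties resolve deterministically.
        nxt = max(sorted(remaining), key=lambda k: cosine(current, features[k]))
        order.append(nxt)
        remaining.remove(nxt)
    return order
```

A greedy walk yields smooth local transitions, but it can end with abrupt jumps once the nearby items are used up. Solving the ordering as a traveling-salesman-style problem would spread those jumps more evenly.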

t-SNE map of 7,500 objects from The Met's digital collection